Elastic search

old>Admin |

|||

| (28 intermediate revisions by 3 users not shown) | |||

| Line 4: | Line 4: | ||

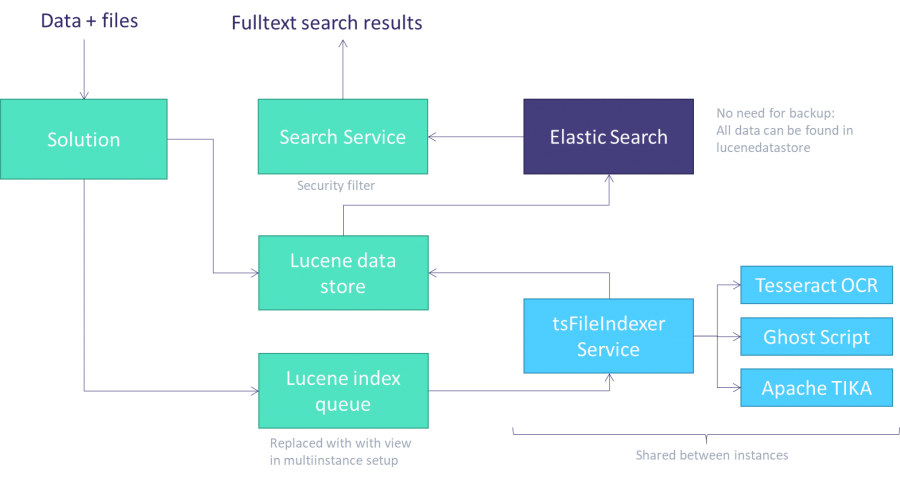

Files are indexed together with the data in the records, so a record can be found by either their record values (name, phone etc.) or by search hits in files attached to those records. Results are filtered realtime according to the current security model, so no indexing is needed if settings change. | Files are indexed together with the data in the records, so a record can be found by either their record values (name, phone etc.) or by search hits in files attached to those records. Results are filtered realtime according to the current security model, so no indexing is needed if settings change. | ||

[[File:File_indexing.png|900px]] | [[File:File_indexing.png|900px]] | ||

| Line 24: | Line 22: | ||

Follow these steps: | Follow these steps: | ||

sudo rpm --import https://packages.elastic.co/GPG-KEY-elasticsearch | sudo rpm --import https://packages.elastic.co/GPG-KEY-elasticsearch | ||

sudo curl https://gist.githubusercontent.com/nl5887/b4a56bfd84501c2b2afb/raw/elasticsearch.repo > /etc/yum.repos.d/elasticsearch.repo | sudo sh -c 'curl https://gist.githubusercontent.com/nl5887/b4a56bfd84501c2b2afb/raw/elasticsearch.repo >> /etc/yum.repos.d/elasticsearch.repo' | ||

sudo yum update -y | sudo yum update -y | ||

sudo yum install -y elasticsearch | sudo yum install -y elasticsearch | ||

sudo chkconfig elasticsearch on | sudo chkconfig elasticsearch on | ||

The service runner configurations should have updated RAM allowance by adding an extra line in the file | |||

sudo nano /etc/sysctl.conf | |||

vm.max_map_count=262144 | |||

Restart service and validate settings were updated | |||

sudo sysctl --system | |||

sysctl vm.max_map_count | |||

Run the daemon | |||

sudo service elasticsearch start | |||

==== Java 7 / Elastic search 1.7 ==== | ==== Java 7 / Elastic search 1.7 ==== | ||

| Line 45: | Line 57: | ||

curl 'http://localhost:9200/?pretty' | curl 'http://localhost:9200/?pretty' | ||

===== Handling crashes ===== | |||

ElasticSearch normally requires 1GB of memory, which is in the default memory configuation | |||

sudo nano /etc/elasticsearch/jvm.options | |||

Set the maximum memory entry to a lower value | |||

-Xmx256m | |||

Then restart the service | |||

sudo service elasticsearch restart | |||

==== Fixing: "curl: (7) Failed to connect to localhost port 9200: Connection refused" ==== | |||

In some cases the firewall needs to be congured | |||

sudo iptables -I INPUT -p tcp --dport 9200 --syn -j ACCEPT | |||

sudo iptables -I INPUT -p udp --dport 9200 -j ACCEPT | |||

sudo iptables-save | |||

=== Install TS indexing service === | === Install TS indexing service === | ||

Install war file | Install war file | ||

cd /usr/share/ | cd /usr/share/tomcat7/webapps/ | ||

sudo wget https://www.tempusserva.dk/install/tsFileIndexingService.war | sudo wget https://www.tempusserva.dk/install/tsFileIndexingService.war | ||

A couple of seconds later you can configure he data connection and paths for OCR librarys | A couple of seconds later you can configure he data connection and paths for OCR librarys | ||

sudo nano /usr/share/ | sudo nano /usr/share/tomcat7/conf/Catalina/localhost/tsFileIndexingService.xml | ||

(or depending on Linux distribution) | |||

sudo nano /etc/tomcat7/Catalina/localhost/tsFileIndexingService.xml | |||

Example configurations can be seen below | |||

Restart server after changes | Restart server after changes | ||

| Line 60: | Line 98: | ||

tstomcatrestart | tstomcatrestart | ||

==== Windows example configuration ==== | |||

<syntaxhighlight lang = "xml"> | |||

<?xml version="1.0" encoding="UTF-8"?> | |||

<Context antiJARLocking="true" path="/tsFileIndexingService"> | |||

<Resource name="jdbc/TempusServaLive" auth="Container" type="javax.sql.DataSource" | |||

maxActive="80" maxIdle="30" maxWait="2000" | |||

removeAbandoned="true" removeAbandonedTimeout="60" logAbandoned="true" | |||

validationQuery="SELECT 1" validationInterval="30000" testOnBorrow="true" | |||

username="root" password="TempusServaFTW!" driverClassName="com.mysql.jdbc.Driver" | |||

url="jdbc:mysql://localhost:3306/tslive?autoReconnect=true" | |||

/> | |||

<Parameter name="ExecutableImageMagick" value="c:\ImageMagick\convert"/> | |||

<Parameter name="ExecutableGhostscript" value="c:\Program Files\gs\gs9.20\bin\gswin64c.exe"/> | |||

<Parameter name="ExecutableTesseract" value="c:\Program Files (x86)\Tesseract-OCR\tesseract"/> | |||

<Parameter name="LanguagesTesseract" value="eng+dan"/> | |||

<Parameter name="ElasticServerAddress" value="localhost"/> | |||

</Context> | |||

</syntaxhighlight> | |||

==== Linux example configuration ==== | |||

<syntaxhighlight lang = "xml"> | |||

<?xml version="1.0" encoding="UTF-8"?> | |||

<Context antiJARLocking="true" path="/tsFileIndexingService"> | |||

<Resource name="jdbc/TempusServaLive" auth="Container" type="javax.sql.DataSource" | |||

maxActive="80" maxIdle="30" maxWait="2000" | |||

removeAbandoned="true" removeAbandonedTimeout="60" logAbandoned="true" | |||

validationQuery="SELECT 1" validationInterval="30000" testOnBorrow="true" | |||

username="root" password="TempusServaFTW!" driverClassName="com.mysql.jdbc.Driver" | |||

url="jdbc:mysql://localhost:3306/tslive?autoReconnect=true" | |||

/> | |||

<Parameter name="ExecutableImageMagick" value="/usr/bin/convert"/> | |||

<Parameter name="ExecutableGhostscript" value="/usr/bin/ghostscript"/> | |||

<Parameter name="ExecutableTesseract" value="/usr/bin/tesseract"/> | |||

<Parameter name="LanguagesTesseract" value="eng+dan"/> | |||

<Parameter name="ElasticServerAddress" value="localhost"/> | |||

</Context> | |||

</syntaxhighlight> | |||

=== Enable and test indexing in Tempus Serva === | === Enable and test indexing in Tempus Serva === | ||

| Line 104: | Line 183: | ||

Afterwards change the configurations in the file indexer | Afterwards change the configurations in the file indexer | ||

sudo nano /usr/share/ | sudo nano /usr/share/tomcat7/conf/Catalina/localhost/tsFileIndexingService.xml | ||

The values should be | The values should be | ||

| Line 113: | Line 192: | ||

After changing the values restart the server. | After changing the values restart the server. | ||

== | == Lucene data store and services == | ||

'''Lucene data store''' will contain lines for each record and record file in the system. | |||

All data in '''Lucene data store''' will be sent to Elastic search. Every time a record is updated an entry is made in '''Lucene data store''', and by default the data is sent synchroniously to ElasticSearch (fulltextBatchProcessBlobs). | |||

Files are instead put in the '''Lucene file queue'''. By default the indexer is notified imidiately, a will start executing the '''Lucene file queue'''. When finished each translated text is written back into the '''Lucene data store''' and deleted from the '''Lucene file queue'''. Data is by default be sent to Elastic search right away (fulltextBatchProcessFiles). | |||

TS contains 2 services | |||

* Data index builder | |||

* File index builder | |||

These services sends data from '''Lucene data store''' to ElasticSearch. As mentioned this will normally be carried out automatically / synchroniously, unless some kind of error occurs - like Elastic being offline etc. In that case unprocessed items queue up: In the datastore, file queue or both. | |||

Running the services will handle everything in the queues. | |||

Consider having '''Data index builder''' running every day (1440 mins) to clean up the queue now and then. | |||

== Trouble shooting == | == Trouble shooting == | ||

Latest revision as of 11:09, 26 May 2023

Introduction

Adding Elastic search to your existing TS installation provides free-text search in both data and files.

Files are indexed together with the data in the records, so a record can be found either by its field values (name, phone etc.) or by search hits in files attached to it. Results are filtered in real time according to the current security model, so no reindexing is needed when security settings change.

Install

To index records and files, complete these steps:

- Install standalone Elastic search server

- Install and configure Tempus Serva file indexing

- Configure the Tempus Serva installation

Finally, you may want to install optional components to handle OCR (scanned PDFs and images).

Install Elastic search

Java 8 / Elastic search 6

This is the recommended version but requires Java 8.

Follow these steps:

sudo rpm --import https://packages.elastic.co/GPG-KEY-elasticsearch
sudo sh -c 'curl https://gist.githubusercontent.com/nl5887/b4a56bfd84501c2b2afb/raw/elasticsearch.repo >> /etc/yum.repos.d/elasticsearch.repo'
sudo yum update -y
sudo yum install -y elasticsearch
sudo chkconfig elasticsearch on

Elastic search needs a higher limit on memory map areas (vm.max_map_count) than most Linux distributions allow by default. Raise it by adding an extra line to /etc/sysctl.conf:

sudo nano /etc/sysctl.conf

vm.max_map_count=262144

Reload the kernel settings and verify that the new value is active:

sudo sysctl --system
sysctl vm.max_map_count

Run the daemon

sudo service elasticsearch start
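The same check can be scripted, for example before starting the daemon. This is a minimal Python sketch, assuming a Linux host where the current value is exposed in /proc/sys/vm/max_map_count (the file that `sysctl vm.max_map_count` reads). The function names are hypothetical and not part of Tempus Serva or Elastic search.

```python
from pathlib import Path

# Minimum value from the sysctl.conf line above
REQUIRED_MAX_MAP_COUNT = 262144


def parse_max_map_count(text: str) -> int:
    """Convert the one-line file contents (a number plus newline) to an integer."""
    return int(text.strip())


def read_max_map_count(path: str = "/proc/sys/vm/max_map_count") -> int:
    """Read the current kernel value from /proc (Linux only)."""
    return parse_max_map_count(Path(path).read_text())


def max_map_count_ok(value: int, minimum: int = REQUIRED_MAX_MAP_COUNT) -> bool:
    """True if the kernel allows at least `minimum` memory map areas."""
    return value >= minimum
```

For example, `max_map_count_ok(read_max_map_count())` should return True once `sudo sysctl --system` has applied the new line.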

Java 7 / Elastic search 1.7

This is an alternative version for servers running Java 7.

Download and unpack the files:

sudo wget https://download.elastic.co/elasticsearch/elasticsearch/elasticsearch-1.7.6.tar.gz
tar -xvf elasticsearch-1.7.6.tar.gz
sudo rm elasticsearch-1.7.6.tar.gz

Run as a daemon

elasticsearch-1.7.6/bin/elasticsearch -d

Test that the service is running

curl 'http://localhost:9200/?pretty'
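When this check is part of a script, the JSON returned by the root endpoint can be parsed instead of read by eye. Below is a minimal Python sketch, assuming the default address http://localhost:9200. The helper names are hypothetical. Elastic search reports its version under `version.number` in this response.

```python
import json
import urllib.request


def elasticsearch_version(body: str) -> str:
    """Extract the version number from the JSON returned by GET / ."""
    info = json.loads(body)
    return info["version"]["number"]


def fetch_root(url: str = "http://localhost:9200/", timeout: float = 5.0) -> str:
    """Return the raw JSON body of the root endpoint.

    Raises urllib.error.URLError if nothing answers on the port.
    """
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

`elasticsearch_version(fetch_root())` returns the version string, for example "1.7.6" for the tarball installed above.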

Handling crashes

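By default Elastic search reserves 1 GB of heap memory, which can make it crash on servers with little RAM. The heap size is set in the JVM options file:

sudo nano /etc/elasticsearch/jvm.options

Set both the initial (-Xms) and maximum (-Xmx) heap entries to a lower value. The JVM refuses to start if -Xms is larger than -Xmx.

-Xms256m
-Xmx256m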

Then restart the service

sudo service elasticsearch restart
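Editing the heap lines can also be scripted. The JVM will not start when the initial heap (-Xms) is larger than the maximum heap (-Xmx), so this Python sketch rewrites both entries to the same value. It assumes the file already contains uncommented -Xms and -Xmx lines, as the default Elastic search 6 jvm.options does. The function name is hypothetical.

```python
import re

# Matches an uncommented heap line such as "-Xms1g" or "-Xmx1g"
_HEAP_LINE = re.compile(r"^-Xm[sx]\S*$")


def set_heap_size(options_text: str, size: str) -> str:
    """Set every existing -Xms/-Xmx line to `size` (e.g. "256m").

    Commented-out lines and other JVM flags are left untouched; missing
    heap lines are not added.
    """
    lines = []
    for line in options_text.splitlines():
        stripped = line.strip()
        if _HEAP_LINE.match(stripped):
            flag = stripped[:4]  # "-Xms" or "-Xmx"
            line = f"{flag}{size}"
        lines.append(line)
    return "\n".join(lines) + "\n"
```

Run it as root: read /etc/elasticsearch/jvm.options, pass the text through `set_heap_size(text, "256m")`, write the result back and restart the service.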

Fixing: "curl: (7) Failed to connect to localhost port 9200: Connection refused"

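In some cases the firewall needs to be configured to accept connections on port 9200:

sudo iptables -I INPUT -p tcp --dport 9200 --syn -j ACCEPT
sudo iptables -I INPUT -p udp --dport 9200 -j ACCEPT
sudo iptables-save

iptables-save only prints the active rules. To keep them after a reboot on CentOS/RHEL with the iptables service, write them to the rules file: sudo sh -c 'iptables-save > /etc/sysconfig/iptables'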
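curl error (7) means that no TCP connection could be opened to the port. The Python sketch below performs the same test, which is handy when curl is not available on the machine that needs to reach Elastic search. The default host, port and timeout are assumptions. Adjust them to your setup.

```python
import socket


def port_open(host: str = "localhost", port: int = 9200, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers "connection refused", timeouts and unreachable hosts
        return False
```

`port_open("localhost", 9200)` returns False both when nothing listens on the port and when a firewall blocks it, so also check that the service is running.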

Install TS indexing service

Install the war file:

cd /usr/share/tomcat7/webapps/
sudo wget https://www.tempusserva.dk/install/tsFileIndexingService.war

A few seconds later, once Tomcat has deployed the war file, you can configure the data connection and the paths to the OCR libraries:

sudo nano /usr/share/tomcat7/conf/Catalina/localhost/tsFileIndexingService.xml

Or, depending on the Linux distribution:

sudo nano /etc/tomcat7/Catalina/localhost/tsFileIndexingService.xml

Example configurations are shown below.

Restart the server after making changes:

tstomcatrestart

Windows example configuration

<?xml version="1.0" encoding="UTF-8"?>

<Context antiJARLocking="true" path="/tsFileIndexingService">

<Resource name="jdbc/TempusServaLive" auth="Container" type="javax.sql.DataSource"

maxActive="80" maxIdle="30" maxWait="2000"

removeAbandoned="true" removeAbandonedTimeout="60" logAbandoned="true"

validationQuery="SELECT 1" validationInterval="30000" testOnBorrow="true"

username="root" password="TempusServaFTW!" driverClassName="com.mysql.jdbc.Driver"

url="jdbc:mysql://localhost:3306/tslive?autoReconnect=true"

/>

<Parameter name="ExecutableImageMagick" value="c:\ImageMagick\convert"/>

<Parameter name="ExecutableGhostscript" value="c:\Program Files\gs\gs9.20\bin\gswin64c.exe"/>

<Parameter name="ExecutableTesseract" value="c:\Program Files (x86)\Tesseract-OCR\tesseract"/>

<Parameter name="LanguagesTesseract" value="eng+dan"/>

<Parameter name="ElasticServerAddress" value="localhost"/>

</Context>

Linux example configuration

<?xml version="1.0" encoding="UTF-8"?>

<Context antiJARLocking="true" path="/tsFileIndexingService">

<Resource name="jdbc/TempusServaLive" auth="Container" type="javax.sql.DataSource"

maxActive="80" maxIdle="30" maxWait="2000"

removeAbandoned="true" removeAbandonedTimeout="60" logAbandoned="true"

validationQuery="SELECT 1" validationInterval="30000" testOnBorrow="true"

username="root" password="TempusServaFTW!" driverClassName="com.mysql.jdbc.Driver"

url="jdbc:mysql://localhost:3306/tslive?autoReconnect=true"

/>

<Parameter name="ExecutableImageMagick" value="/usr/bin/convert"/>

<Parameter name="ExecutableGhostscript" value="/usr/bin/ghostscript"/>

<Parameter name="ExecutableTesseract" value="/usr/bin/tesseract"/>

<Parameter name="LanguagesTesseract" value="eng+dan"/>

<Parameter name="ElasticServerAddress" value="localhost"/>

</Context>
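To check what a deployed indexer is actually configured with, the context file can be parsed. Below is a minimal Python sketch using the standard library's ElementTree. It assumes the layout of the examples above (one Resource element and a list of Parameter elements under Context). The function names and the `jdbc.url`/`jdbc.username` keys are hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse


def read_indexer_config(xml_text: str) -> dict:
    """Collect the <Parameter> values and JDBC settings from the context file."""
    root = ET.fromstring(xml_text)
    config = {p.get("name"): p.get("value") for p in root.iter("Parameter")}
    resource = root.find("Resource")
    if resource is not None:
        config["jdbc.url"] = resource.get("url")
        config["jdbc.username"] = resource.get("username")
    return config


def database_name(jdbc_url: str) -> str:
    """Return the schema from a URL like jdbc:mysql://localhost:3306/tslive?autoReconnect=true."""
    if jdbc_url.startswith("jdbc:"):
        jdbc_url = jdbc_url[len("jdbc:"):]
    return urlparse(jdbc_url).path.lstrip("/")
```

For example, passing the text of /usr/share/tomcat7/conf/Catalina/localhost/tsFileIndexingService.xml to `read_indexer_config` lists its parameters. Calling `database_name` on the JDBC URL gives the schema, which is tslive in the examples.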

Enable and test indexing in Tempus Serva

Set the following configurations to true

- fulltextIndexData

- fulltextIndexFile

Also add port 8080 to the URL in the following setting:

- fulltextFileHandlerURL

Update any record in the TS installation

Check that the index has been created and that it contains a mapping for the solution:

curl 'http://localhost:9200/tempusserva/?pretty'

Next, validate that records are found when searching (replace * with a valid search term):

curl 'http://localhost:9200/tempusserva/_search?pretty&q=*'

Finally, validate that the Tempus Serva wrapper also works:

http://<server>/TempusServa/fulltextsearch?subtype=4&term=*
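The three checks can also be run from a script. This Python sketch builds the same URLs and counts the hits in a search response. The server name and search term are placeholders, and the helper names are hypothetical. Note that `hits.total` is a plain number in Elastic search 6 and earlier but an object with a `value` field from version 7 onwards, so the sketch accepts both.

```python
import json
from urllib.parse import quote


def search_url(term: str, host: str = "http://localhost:9200", index: str = "tempusserva") -> str:
    """URL equivalent to the curl _search example (without pretty-printing)."""
    return f"{host}/{index}/_search?q={quote(term)}"


def wrapper_url(server: str, term: str) -> str:
    """URL of the Tempus Serva full text search wrapper."""
    return f"http://{server}/TempusServa/fulltextsearch?subtype=4&term={quote(term)}"


def hit_count(body: str) -> int:
    """Total number of hits in an Elastic search _search response."""
    total = json.loads(body)["hits"]["total"]
    return total["value"] if isinstance(total, dict) else int(total)
```

A hit count of zero for a term that appears in the updated record suggests that the record was not sent to Elastic search.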

Optional OCR components

Install ImageMagick and Ghostscript (Ghostscript is probably already installed):

sudo yum install ImageMagick
sudo yum install ghostscript

Also install tesseract

CentOS/Fedora

sudo yum install tesseract-ocr

Amazon Linux

sudo yum --enablerepo=epel --disablerepo=amzn-main install libwebp
sudo yum --enablerepo=epel --disablerepo=amzn-main install tesseract

Afterwards, update the executable paths in the file indexer configuration:

sudo nano /usr/share/tomcat7/conf/Catalina/localhost/tsFileIndexingService.xml

The values should be:

- ExecutableTesseract: /usr/bin/tesseract

- ExecutableImageMagick: /usr/bin/convert

- ExecutableGhostscript: /usr/bin/ghostscript

After changing the values, restart the server.
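Before restarting, you may want to confirm that the configured paths really point to executable files. Below is a minimal Python sketch for Linux, assuming the parameter names from the Linux example configuration. The dictionary passed in (parameter name to path) and the function name are hypothetical.

```python
import os

# Parameter names used in the example tsFileIndexingService.xml
OCR_PARAMETERS = ("ExecutableTesseract", "ExecutableImageMagick", "ExecutableGhostscript")


def missing_executables(config: dict) -> list:
    """Return the OCR parameters whose path is unset or not an executable file."""
    missing = []
    for name in OCR_PARAMETERS:
        path = config.get(name)
        if not path or not (os.path.isfile(path) and os.access(path, os.X_OK)):
            missing.append(name)
    return missing
```

For example, `missing_executables({"ExecutableTesseract": "/usr/bin/tesseract", "ExecutableImageMagick": "/usr/bin/convert", "ExecutableGhostscript": "/usr/bin/ghostscript"})` returns an empty list when all three tools are installed at those paths.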

Lucene data store and services

The Lucene data store contains a row for each record and each record file in the system.

All data in the Lucene data store is sent to Elastic search. Every time a record is updated, an entry is made in the Lucene data store, and by default the data is sent synchronously to Elastic search (fulltextBatchProcessBlobs).

Files are instead put in the Lucene file queue. By default the indexer is notified immediately and starts processing the Lucene file queue. When a file has been processed, its extracted text is written back into the Lucene data store and the file is deleted from the Lucene file queue. By default this data is also sent to Elastic search right away (fulltextBatchProcessFiles).

TS contains 2 services

- Data index builder

- File index builder

These services send data from the Lucene data store to Elastic search. As mentioned, this normally happens automatically and synchronously, unless an error occurs, for example if Elastic search is offline. In that case unprocessed items queue up in the data store, the file queue or both.

Running the services processes everything in the queues.

Consider scheduling the Data index builder to run every day (1440 minutes) so that the queue is cleared regularly.
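To see whether items are piling up (for example after Elastic search has been offline), you can count the two queues directly in the database. Below is a Python sketch that uses any DB-API 2.0 connection, such as one from a MySQL driver. The table and column names are taken from the SQL statements on this page. Treating IsProcessed = 0 as "not yet sent" is an assumption based on the Rewrite index section. The function name is hypothetical.

```python
def queue_backlog(conn) -> dict:
    """Count items still waiting to be sent to Elastic search.

    `conn` is any DB-API 2.0 connection to the Tempus Serva database.
    """
    cur = conn.cursor()
    # Rows in the data store that have not been sent yet (assumed meaning of IsProcessed = 0)
    cur.execute("SELECT COUNT(*) FROM lucenedatastore WHERE IsProcessed = 0")
    unprocessed = cur.fetchone()[0]
    # Files are deleted from the queue once processed, so every row is pending
    cur.execute("SELECT COUNT(*) FROM lucenefilequeue")
    queued_files = cur.fetchone()[0]
    cur.close()
    return {"unprocessed_data": unprocessed, "queued_files": queued_files}
```

If either number stays high after the services have run, check that Elastic search is reachable and that the file indexer is healthy.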

Troubleshooting

Status of the file indexer

The file indexer has a status page that displays information about the state of the indexer:

https://<server>/tsFileIndexingService/execute

The page also contains the keyword "HEALTHY", which is displayed if the process has not exceeded the specified timeouts.
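The keyword makes the status page easy to monitor, for example from a scheduled job. Below is a Python sketch, assuming the page is reachable over HTTPS at the URL above. The function names are hypothetical. Because the page is served under /execute, it is not clear whether a request also starts indexing work, so poll it at a modest interval.

```python
import re
import urllib.request

# Whole-word match, so a text such as "UNHEALTHY" is not mistaken for "HEALTHY"
_HEALTHY = re.compile(r"\bHEALTHY\b")


def is_healthy(page: str) -> bool:
    """True if the status page contains the keyword HEALTHY."""
    return _HEALTHY.search(page) is not None


def check_indexer(server: str, timeout: float = 10.0) -> bool:
    """Fetch the status page and report whether the indexer is healthy."""
    url = f"https://{server}/tsFileIndexingService/execute"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return is_healthy(response.read().decode("utf-8", errors="replace"))
    except OSError:
        # Unreachable server, HTTP errors (HTTPError is an OSError) and timeouts
        return False
```

A monitoring job could raise an alert whenever `check_indexer("<server>")` returns False.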

Controlling timeouts

Timeouts are specified in seconds and should be tuned to the CPU capacity and the quality of the documents:

<Parameter name="TimeoutTesseract" value="600"/>
<Parameter name="TimeoutGhostscript" value="60"/>

Poor-quality documents in virtualized environments can easily take about a minute per page.
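At roughly one minute per page, the example TimeoutTesseract of 600 seconds covers about 10 pages of poor-quality scans. The helpers below are a back-of-the-envelope Python sketch. This page does not say whether the timeout applies per document or per command invocation, so treat `pages` as the number of pages handled by one OCR run (an assumption). The 1.5 safety margin is also an arbitrary assumption, and the function names are hypothetical.

```python
import math


def suggested_timeout(pages: int, seconds_per_page: float = 60.0, margin: float = 1.5) -> int:
    """Rough timeout in seconds: pages times seconds per page, plus a safety margin."""
    return math.ceil(pages * seconds_per_page * margin)


def pages_covered(timeout_seconds: float, seconds_per_page: float = 60.0) -> int:
    """How many pages fit within a given timeout at the assumed speed."""
    return int(timeout_seconds // seconds_per_page)
```

With these defaults, `pages_covered(600)` gives 10 and `suggested_timeout(10)` gives 900.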

Debugging the OCR process

By default, output from the external components is written to log files. This can be disabled by adding this option:

<Parameter name="SuppressCommandOutput" value="0"/>

Note that there is also a switch in the configuration file (context.xml) that disables file deletion on the server:

<Parameter name="DisableFileCleanup" value=""/>

Reindexing

Reindex files

Before reindexing starts, you may clean up the index (this is optional):

DELETE FROM lucenedatastore WHERE FieldID > 0;

To reindex, execute the statement below with the following parameters:

- schema of the database (example: "tslive")

- file table of the solution (example: "data_solution_file")

INSERT INTO lucenefilequeue (application,tablename,FileID) SELECT 'tslive', 'data_solution_file', f.ID as FileID FROM data_solution_file as f WHERE f.IsDeleted = 0;

After executing the statement, run the file indexing service and wait patiently.

Rewrite index

In case your Elastic search index is lost or corrupted, it is quite easy to add the whole database to Elastic search again:

UPDATE lucenedatastore SET IsProcessed = 0;

Note that this only sends all data once more; no file indexing or OCR is carried out.
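The same update can be issued from a maintenance script. Below is a Python sketch that uses any DB-API 2.0 connection. The function name is hypothetical. Afterwards, the Data index builder should pick up the rows again when it next runs, assuming it selects rows with IsProcessed = 0 as the statement above implies.

```python
def requeue_all(conn) -> int:
    """Mark every row in lucenedatastore as unprocessed and commit.

    Returns the row count reported by the driver. MySQL drivers may count
    only rows whose value actually changed.
    """
    cur = conn.cursor()
    cur.execute("UPDATE lucenedatastore SET IsProcessed = 0")
    affected = cur.rowcount
    conn.commit()
    cur.close()
    return affected
```

Run it only when the index really has to be rebuilt, since every row is sent to Elastic search again.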